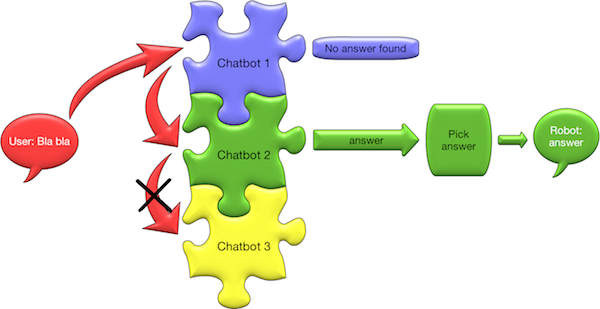

Goal - Give Pepper the ability to chat with a user by querying one or several chatbots and selecting the most appropriate answer according to the chatbots' priorities.

// Create a QiChatbot from a topic list.
val qiChatbot: QiChatbot = QiChatbotBuilder.with(qiContext)
    .withTopics(topics)
    .build()

// Create a custom chatbot.
val myChatbot: Chatbot = MyChatbot(qiContext)

// Build the action.
val chat: Chat = ChatBuilder.with(qiContext)
    .withChatbot(qiChatbot, myChatbot)
    .build()

// Run the action asynchronously.
chat.async().run()

// Create a QiChatbot from a topic list.
QiChatbot qiChatbot = QiChatbotBuilder.with(qiContext)
    .withTopics(topics)
    .build();

// Create a custom chatbot.
Chatbot myChatbot = new MyChatbot(qiContext);

// Build the action.
Chat chat = ChatBuilder.with(qiContext)
    .withChatbot(qiChatbot, myChatbot)
    .build();

// Run the action asynchronously.
chat.async().run();

Typical usage - You want Pepper to have an elaborate conversation with someone.

To build a Chat, use one or several Chatbots.

The Chat action takes care of speech recognition and feedback.

To get an answer, it calls each Chatbot sequentially and picks one reply according to its priority rules.

For further information you can check: Chatbot.

No chatbot can understand every user input and have a good answer for it, yet most people expect the robot to be able to answer anything. Instead of making a single chatbot answer everything and redeveloping content that already exists (Wikipedia, weather and so on), we think that combining existing chatbots that each perform well in their own domain could be a solution.

QiChat lets you develop language interaction for the robot, but many other frameworks can do the same (Dialogflow, Botfuel, Microsoft Bot Framework) and could also be used for the robot.

With several chatbots, however, it can be difficult to keep the robot's behavior consistent for things such as speech recognition, text to speech and robot feedback.

The order of the chatbots in the list determines the order in which the Chat asks them for an answer. The Chat then picks one of the replies depending on its reply priority.

The Chat selects the first ReplyReaction that has the reply priority “Normal”.

If there is no ReplyReaction with a “Normal” priority, the first ReplyReaction with the “Fallback” priority is picked.
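The selection rule above can be sketched in plain Java. This is only a model of the rule as described here, not the QiSDK implementation: `ReplySelection`, `Candidate` and `Priority` are hypothetical names introduced for illustration, and the sketch assumes that the candidate replies have already been collected in chatbot order.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical model for illustration only; none of these types belong to the QiSDK.
class ReplySelection {

    enum Priority { NORMAL, FALLBACK }

    /** One reply proposed by one chatbot (hypothetical stand-in for a ReplyReaction). */
    static final class Candidate {
        final String chatbot;
        final Priority priority;
        final String text;

        Candidate(String chatbot, Priority priority, String text) {
            this.chatbot = chatbot;
            this.priority = priority;
            this.text = text;
        }
    }

    /**
     * Picks the first NORMAL candidate in list order, else the first FALLBACK one.
     * Returns an empty Optional when no chatbot proposed a reply.
     */
    static Optional<Candidate> select(List<Candidate> candidatesInChatbotOrder) {
        Candidate firstFallback = null;
        for (Candidate candidate : candidatesInChatbotOrder) {
            if (candidate.priority == Priority.NORMAL) {
                return Optional.of(candidate);
            }
            if (candidate.priority == Priority.FALLBACK && firstFallback == null) {
                firstFallback = candidate;
            }
        }
        return Optional.ofNullable(firstFallback);
    }

    public static void main(String[] args) {
        List<Candidate> candidates = Arrays.asList(
                new Candidate("qiChatbot", Priority.FALLBACK, "Sorry, I did not understand."),
                new Candidate("myChatbot", Priority.NORMAL, "It is sunny today."));
        // The second chatbot wins: its reply is the first one with NORMAL priority.
        System.out.println(select(candidates).get().text); // prints "It is sunny today."
    }
}
```

In this model, a chatbot placed earlier in the list only wins over a later one when both replies have the same priority, which is why the order passed to withChatbot matters.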

Listening

To be understood, the user should only speak to the robot when the Chat is in a listening state.

The listening state of the Chat is accessible at any time via the getListening method:

val isListening: Boolean = chat.listening

Boolean isListening = chat.getListening();

And a listener:

chat.addOnListeningChangedListener { listening ->
    // Called when the listening state changes.
}

chat.addOnListeningChangedListener(listening -> {
    // Called when the listening state changes.
});

Hearing

You can check whether the robot is currently hearing a human voice via the getHearing method:

val isHearing: Boolean = chat.hearing

Boolean isHearing = chat.getHearing();

And a listener:

chat.addOnHearingChangedListener { hearing ->
    // Called when the hearing state changes.
}

chat.addOnHearingChangedListener(hearing -> {
    // Called when the hearing state changes.
});

Saying

The phrase the robot is saying is accessible via the getSaying method:

val saying: Phrase? = chat.saying

Phrase saying = chat.getSaying();

And a listener:

chat.addOnSayingChangedListener { sayingPhrase ->
    // Called when the robot speaks.
}

chat.addOnSayingChangedListener(sayingPhrase -> {
    // Called when the robot speaks.
});

When the robot is not saying anything, the String contained in the Phrase is empty.
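As an illustration of how the listening, hearing and saying states could be combined, for example to drive an on-screen indicator, here is a minimal sketch in plain Java. `ChatStatus` and its `Status` values are hypothetical names, not part of the QiSDK, and the precedence (speaking over hearing over listening) is an assumption made for this example. It takes the text of the saying Phrase as a plain String and relies on it being empty when the robot is silent.

```java
// Hypothetical helper for illustration only; it is not part of the QiSDK.
class ChatStatus {

    enum Status { SPEAKING, HEARING, LISTENING, IDLE }

    /**
     * Derives a single status from the three Chat states.
     * sayingText is the text of the Phrase being said, empty when the robot is silent.
     */
    static Status of(boolean listening, boolean hearing, String sayingText) {
        if (sayingText != null && !sayingText.isEmpty()) {
            return Status.SPEAKING;
        }
        if (hearing) {
            return Status.HEARING;
        }
        if (listening) {
            return Status.LISTENING;
        }
        return Status.IDLE;
    }

    public static void main(String[] args) {
        System.out.println(of(true, false, ""));       // prints LISTENING
        System.out.println(of(true, true, ""));        // prints HEARING
        System.out.println(of(false, false, "Hello")); // prints SPEAKING
        System.out.println(of(false, false, ""));      // prints IDLE
    }
}
```

In an application, the three arguments would be kept up to date from the listeners shown in this section, and the indicator could tell the user to speak only when the status is LISTENING.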

You can observe what the robot hears via a listener:

chat.addOnHeardListener { heardPhrase ->
    // Called when a phrase was recognized.
}

chat.addOnHeardListener(heardPhrase -> {
    // Called when a phrase was recognized.
});

Sometimes the robot detects human voice but cannot determine the content of the phrase said:

chat.addOnNoPhraseRecognizedListener {
    // Called when no phrase was recognized.
}

chat.addOnNoPhraseRecognizedListener(() -> {
    // Called when no phrase was recognized.
});

After the robot has heard human speech, there are three possible cases:

Normal reply

The reply type is normal when the robot gives an answer produced by any rule except a fallback rule. You can be notified of it via a listener:

chat.addOnNormalReplyFoundForListener { input ->
    // Called when the reply has a normal type.
}

chat.addOnNormalReplyFoundForListener(input -> {
    // Called when the reply has a normal type.
});

Fallback reply

The reply type is fallback when a phrase was heard but the robot cannot provide a good answer, for example when a QiChatbot matches a rule containing e:Dialog/NotUnderstood.

If the robot determined the content of the phrase said, the input parameter contains the phrase; otherwise, it is empty.

chat.addOnFallbackReplyFoundForListener { input ->
    // Called when the reply has a fallback type.
}

chat.addOnFallbackReplyFoundForListener(input -> {
    // Called when the reply has a fallback type.
});

No reply

If the user input doesn’t match any rule and there is no fallback reply, the robot does not reply.

If the robot determined the content of the phrase said, the input parameter contains the phrase; otherwise, it is empty.

chat.addOnNoReplyFoundForListener { input ->
    // Called when no reply was found for the input.
}

chat.addOnNoReplyFoundForListener(input -> {
    // Called when no reply was found for the input.
});
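One possible use of the three reply listeners is to measure how often the robot fails to give a normal reply, which can help spot missing conversation content. The following is a minimal sketch in plain Java under that assumption: `ReplyStats` and its methods are hypothetical names, not part of the QiSDK. It uses atomic counters on the assumption that listeners may be called from a thread other than the one reading the statistics.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper for illustration only; it is not part of the QiSDK.
class ReplyStats {
    private final AtomicInteger normal = new AtomicInteger();
    private final AtomicInteger fallback = new AtomicInteger();
    private final AtomicInteger none = new AtomicInteger();

    // Each method is meant to be called from the matching Chat listener, e.g.
    // chat.addOnNormalReplyFoundForListener(input -> stats.onNormalReply());
    void onNormalReply() { normal.incrementAndGet(); }
    void onFallbackReply() { fallback.incrementAndGet(); }
    void onNoReply() { none.incrementAndGet(); }

    /** Number of user inputs counted so far. */
    int total() {
        return normal.get() + fallback.get() + none.get();
    }

    /** Share of inputs without a normal reply, between 0 and 1; 0 when nothing was counted yet. */
    double unansweredRate() {
        int total = total();
        return total == 0 ? 0.0 : (double) (fallback.get() + none.get()) / total;
    }

    public static void main(String[] args) {
        ReplyStats stats = new ReplyStats();
        stats.onNormalReply();
        stats.onNormalReply();
        stats.onNormalReply();
        stats.onFallbackReply();
        System.out.println(stats.unansweredRate()); // prints 0.25
    }
}
```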

By default, Pepper does not stay motionless while listening: he moves slightly to let you know he is listening.

If necessary, you can disable the body language:

val chat: Chat = ...
chat.listeningBodyLanguage = BodyLanguageOption.DISABLED
chat.async().run()

Chat chat = ...;
chat.setListeningBodyLanguage(BodyLanguageOption.DISABLED);
chat.async().run();

See also: Disabling body language while speaking.